Big Brother Has Terrible Eyesight

How the feds are using and abusing facial recognition software.

For as long as police have been using facial recognition technology, activists and scholars have sounded the alarm about the threat it poses to civil liberties. As it turns out, new testimony from the Government Accountability Office shows that these concerns are well-founded.

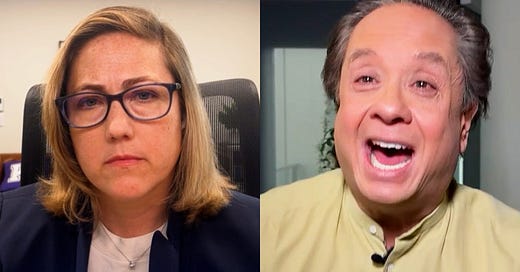

Back in 2016, GAO found that the Department of Justice (DoJ) and the Federal Bureau of Investigation (FBI) lacked proper privacy safeguards and had done little to test the accuracy of their facial recognition databases. At the time, GAO made six recommendations to address these issues, including crafting strict guidelines for what type of data could be collected and from whom, implementing stronger data storage protections, and assessing the accuracy of facial recognition tools before deploying them. Three years later, as the new testimony from GAO’s Gretta L. Goodwin shows, the FBI and DoJ have implemented only one of the six: ensuring that officials conduct face image searches in accordance with DoJ policy. They’ve done little, if anything, to address GAO’s larger concerns about data collection, data storage, and the accuracy of these tools.

So the FBI is continuing to use its facial recognition database, which includes mugshots and other biometric data on more than 50 million people—and is prone to error. The Department of Homeland Security, too, is continuing to build out a biometric database that it hopes will one day include information on 500 million people, many of whom won’t even be suspected criminals.

Law enforcement agencies shouldn’t be allowed to use this technology in their work until they can, at the very least, meet the government’s own standards on privacy and accuracy.

According to GAO, the FBI didn’t assess the accuracy of its facial recognition tool before deploying it, didn’t assess the accuracy of external partner tools, and didn’t ensure that its facial recognition system met “user needs.” This lack of concern for the accuracy of its own database, or the databases of the 21 states that share facial recognition data with the FBI, raises serious concerns that these tools will turn up “false positives.” You can imagine the problems that arise when law enforcement officers use these tools to make arrests.

When FBI officials search for a match in their facial recognition database, called the Next Generation Identification (NGI) database, they see 50 “potential matches” pulled from the massive database, which includes 6 million faces from non-criminal sources. In 2014, TechDirt found that the correct photo is included in the list of 50 potential matches only 80 percent of the time. This leaves the door wide open for potential false positives—and some super unfair incarcerations.

Even among some of the algorithms with higher accuracy rates, the results are still troubling. Amazon’s facial recognition tool, which includes facial data on tens of millions of people and has been sold to many local law enforcement agencies, misidentified 28 members of Congress as suspected criminals. (Insert your own obvious joke here.)

For folks with darker skin, this rings particularly true. A 2018 MIT study found that for “darker-skinned males” there was a 12 percent chance the algorithm wouldn’t even get their gender right, and for darker-skinned females that number was as high as 35 percent. How can law enforcement be trusted to use this technology when it often can’t even get a suspect’s gender right?

Even beyond the concerns with false positives and wrongful incarcerations, there are other things in the GAO report we should worry about. For example, GAO found that the DoJ didn’t produce two very important documents: privacy impact assessments (PIAs) and system of records notices (SORNs). PIAs lay out how facial data is collected, stored, used, and managed, while SORNs inform the public of exactly what type of data is being collected by DoJ. The feds’ lack of transparency leaves the American people in the dark, raising due process concerns, especially considering DHS’s intent to create a 500-million-face database.

The report also mentions that the FBI collaborates with local and state authorities in 21 states where facial recognition is used. The data they share covers more than just criminal mugshots; it also includes driver’s license photos. Without proper PIAs in place, there’s no way to ensure that the FBI isn’t collecting facial data on innocent Americans, and there’s no way to ensure that it properly stores or deletes such data when it does get hold of it.

GAO’s troubling report is a clear example of federal law enforcement taking an “act first, ask questions later” approach to policing. The public needs to pay attention and hold these agencies’ feet to the fire. Until the FBI and DoJ can prove that their tools are accurate enough to keep innocent people out of jail, and that they have proper privacy safeguards in place, they have no business using facial recognition technology. Tell your friends.